SfM revisited

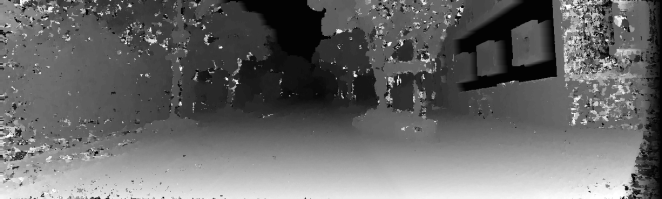

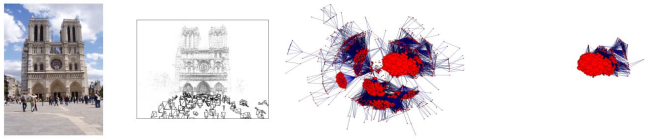

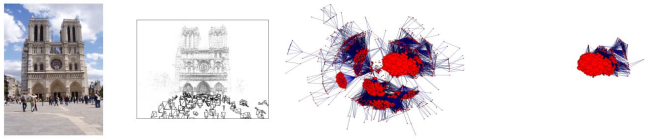

Snavely’s 2007 paper was one of the first breakout pieces of research bringing the power of bundle adjustment and self-calibration of unordered image collections to the community. It paved the way for the use of SfM in many other contexts, but I always appreciated how simple and focused the piece of work was, and how well explained each step in the process is.

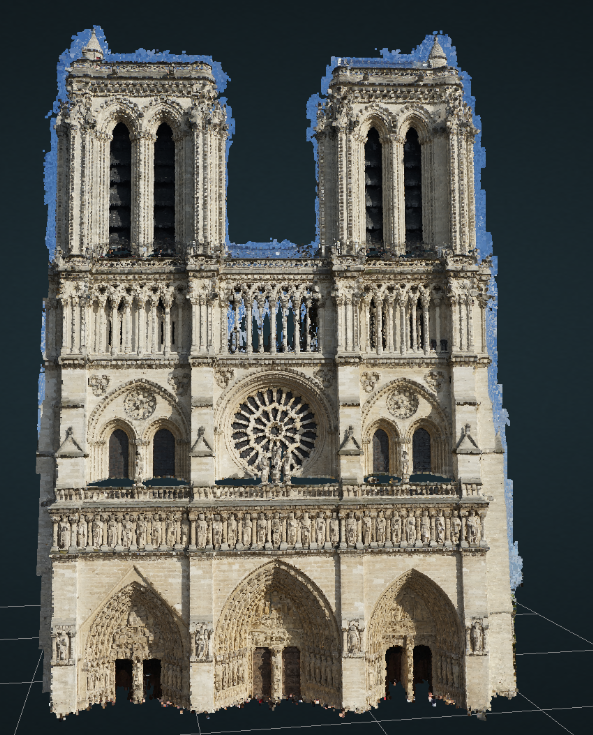

Reconstruction of Notre Dame from Snavely’s paper

For this contribution, I had hoped to recreate a figure from this paper, in which the front facade of the Notre Dame cathedral was reconstructed from internet images. I spent last weekend in Paris, so I decided I’d have a go at collecting my own images and pulling them together into a comparable model.

Whilst the doors of the cathedral were not successfully reconstructed due to the hordes of tourists in each image, the final model came out OK, and is viewable on my website here.

HDR stacking

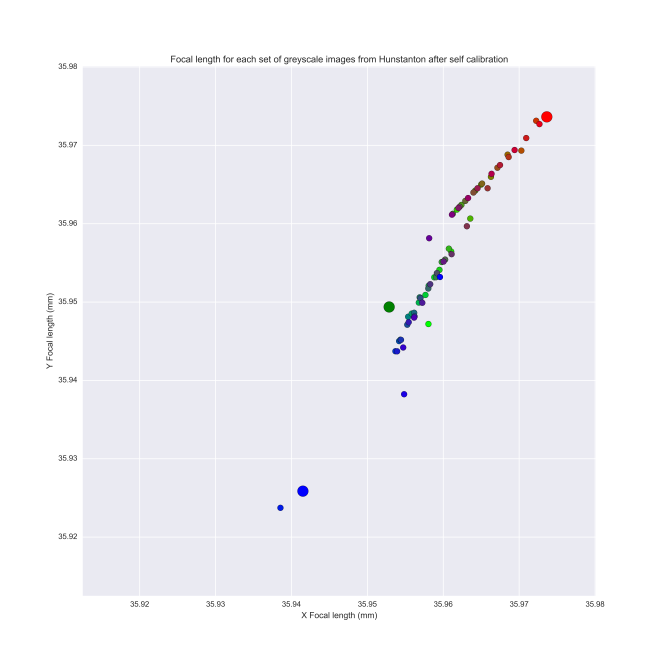

As a second mini-experiment, I thought I’d see how an HDR stack compared with a single exposure from my A7. The dynamic range of the A7, shooting from a tripod at ISO 50, is around 14 EV, so I wasn’t expecting much of the scene’s dynamic range to fall outside this, though potentially parts of the windows could be retrieved. For the experiment, I used both Hugin’s HDR functionality and a custom Python script using OpenCV bindings for generating HDR images, which can be downloaded here.

Results were varied, with really only Mertens’ method of HDR generation showing any notable improvement on the original input.


Some interesting things happened, including Hugin’s alignment algorithm misaligning the images (or miscalculating the lens distortion) to create a bowed-out facade by default, which was pretty interesting to see! From reading Robertson’s paper, I believe his method was designed for grayscale images rather than full colour, but I thought I’d leave the funky result in for completeness.

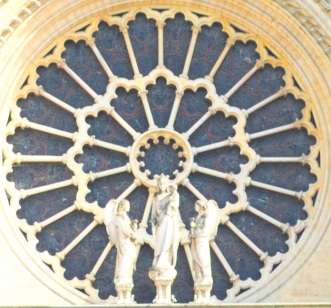

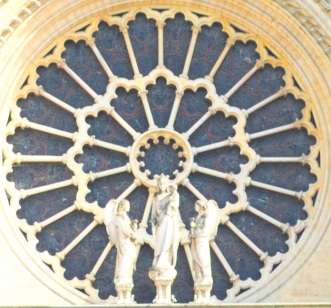

If we crop into the middle stained-glass window, we can see some of the fine detail the HDR stacks might be picking up in comparison to the original JPG.


We can see a lot of the finer detail of the famous stained-glass windows revealed by Mertens’ HDR method, which is very cool to see! I’m impressed with just how big the difference is between it and the default off-camera JPG.

Looking at the raw file from the middle exposure, much of the detail of the stained glass is still there, though it has been clipped in the on-camera JPG processing.

Original image processed from RAW and contrast boosted showing fine detail on stained glass

This supports many of the lines of reasoning I’ve presented in the last few contributions on image compression, as these fine details can often reveal features of interest.

I had actually planned to present the results from a different experiment first, but I will return to that in a later blog post, as it requires much more explanation and data processing. Watch this space for future contributions from Paris!