I went to Mat Disney’s inaugural lecture at UCL last Tuesday. Mat was (is?) the course coordinator for the Masters in Remote Sensing at UCL, and was reflecting on his career: how he got to where he is and what the future might hold. I really enjoyed it, as there’s often a veil of mystery over senior academics. I’ll summarise the core points, as they’re definitely of interest to a wider audience!

Trees are great

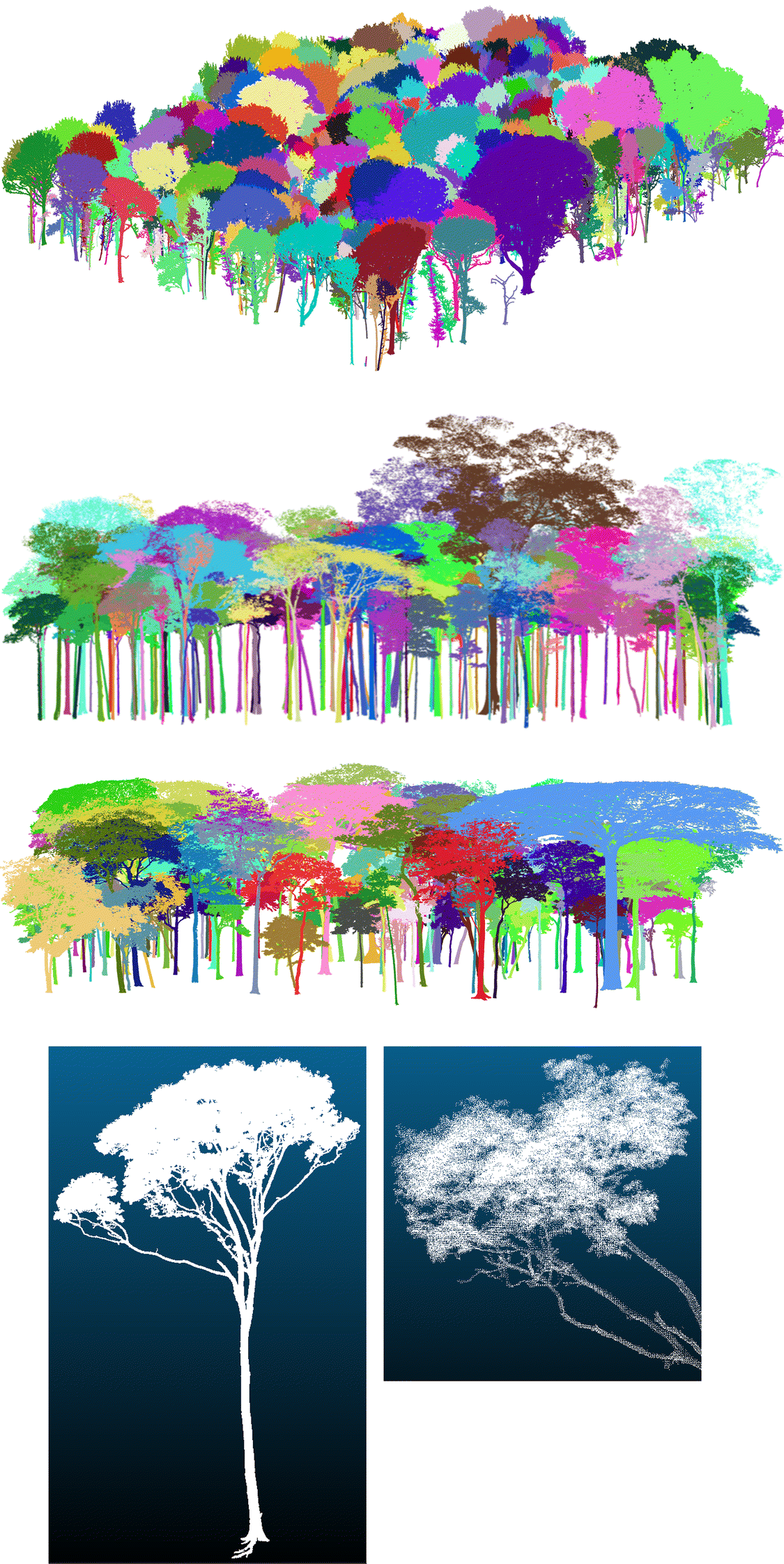

Taken from Mat’s blog

One of the first ports of call was just a general discussion around trees. Their great diversity is worth celebrating, from the tall (up to 120m!) redwoods of the west coast of the US to stumpy flat trees on the sides of windswept valleys. Our scientific understanding of trees may be (and, as we will find out later, is) limited, but appreciating them as amazing organisms is worth doing in the first instance.

But trees are hard to weigh

Carbon estimates for trees are a crucial input to the models driving climate change predictions, and Mat succinctly summarised the major gaps in knowledge associated with them. Firstly, to get a real measurement of the amount of carbon stored in a tree, you have no choice but to chop it down and weigh it. It’s a huge and grueling effort, so it’s no wonder that only 4,000 or so trees had been felled and weighed in tropical forests as of 2015, and it is by extrapolating from those that we get our estimates for the amount of carbon in tropical forests. Obviously, this has huge implications for the accuracy of these models, as the size and diversity of the sample are minuscule when scaled globally. Even in the UK, where you would expect the measurements to be more refined than in the harsh environments of the tropics, we found out that carbon estimates are based upon a sample of 60 or so trees from a paper written in the late 60s, with a simple linear relationship used to extrapolate to the whole of the UK! In data science we make lots of assumptions, but this is up there as a massive howler. So how can we hope to get more ground truth?

Lasers can weigh them

Image from Mat’s blog

Enter our hero, the RIEGL laser scanner, which has gone on tours of tropical forests across the globe, taking 3D images of trees to virtually weigh them where they stand. Mat has used these 3D images to redefine the principles of allometry, the study of how measurements scale relative to one another (such as brain size vs weight), when it comes to trees. He showed that the allometric relationships in use underestimate carbon in tropical forests by as much as 20%! In the UK, he revisited the 60-odd samples on which all UK forestry estimates are based, and showed that these estimates are off by as much as 120%! These are really incredible figures that show how far wrong we’ve been going so far.
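
As a quick aside, here’s a minimal sketch of what an allometric fit looks like and why a small sample is so risky. To be clear, this is my own illustration and not Mat’s method: the function names, the assumed power-law form (mass = a × diameter^b) and every number below are made up for the example.

```python
import math


def fit_allometry(diameters_cm, masses_kg):
    """Fit mass = a * diameter**b by least squares on log-log axes.

    Hypothetical helper for illustration only.
    """
    xs = [math.log(d) for d in diameters_cm]
    ys = [math.log(m) for m in masses_kg]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx                      # slope in log-log space = exponent
    a = math.exp(mean_y - b * mean_x)  # intercept in log-log space = log(a)
    return a, b


def predict_mass(a, b, diameter_cm):
    """Predicted mass (kg) for a trunk diameter (cm) under the fitted power law."""
    return a * diameter_cm ** b


# Synthetic, noise-free "felled trees" (invented numbers, not real data).
TRUE_A, TRUE_B = 0.1, 2.5
sample_diameters = [10, 15, 20, 25, 30]
sample_masses = [TRUE_A * d ** TRUE_B for d in sample_diameters]

a, b = fit_allometry(sample_diameters, sample_masses)
print(f"fitted a = {a:.3f}, b = {b:.3f}")

# Now pretend the small sample had pushed the exponent down by just 0.1,
# and apply that to a tree far bigger than anything in the sample.
big_tree_cm = 150
ratio = predict_mass(a, b - 0.1, big_tree_cm) / predict_mass(a, b, big_tree_cm)
print(f"Underestimate for a {big_tree_cm} cm tree: {(1 - ratio) * 100:.0f}%")
```

In this toy setup, nudging the exponent by 0.1 makes the 150 cm tree come out roughly 40% too light. Since the biggest trees hold a large share of the mass, small errors in a relationship fitted to a handful of small trees can grow quickly once you extrapolate.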

From space?

The GEDI (a recently launched LiDAR) and BIOMASS (PolInSAR) missions are hoping to tie the ground truth data being recorded by the likes of Mat much more tightly to satellite data, which will hopefully vastly increase our ability to estimate carbon stores in tropical forests. This, combined with the clear communication of Mat’s methods and the distinct gap in knowledge, makes it very important and interesting research!

Lastly, I’d like to give a big congratulations to Mat on the chair. It was well earned!