Two years ago I agreed to join the committee of the Remote Sensing and Photogrammetry Society (RSPSoc), a professional body whose remit is to promote advancements in Remote Sensing Science and to educate its members and the public about them. When I signed up as the Wavelength representative, I admittedly knew very little about how this society operated, or about societies in general and their function in the greater scope of scientific progress. I took on the role knowing I’d have to learn fast and, after a two-year lead period, host a conference focusing on Remote Sensing and Photogrammetry, which would bring early career researchers from both academia and industry together to discuss the latest advancements in RSP Science.

The first Wavelength conference I attended way back in 2015 was at Newcastle, a few months after my first conference experience at the 2014 GRSG meeting in London, just two months after starting my project.

The difference was apparent, with the GRSG attracting the old guard from all over the world to contribute to the conference. I distinctly remember Nigel Press, a veteran Remote Sensor and founder of NPA satellite mapping, turning around to the crowd during a Q and A session and pleading with people to start taking risks on funding and supporting hyperspectral satellite missions, as their contributions to geological research were so apparent. I didn’t mention it in my write-up from that conference, but it really stuck with me as, at least for that minute, it all seemed so human. Apart from that, though, it was all quite formal, and it was difficult to tell how I, as a novice, could really play a part.

With Wavelength, however, this humanity is what it’s all about! When everyone’s a novice, you can afford to be a bit more gung-ho with your opinions. As someone who always tries to ask, or at least dream up, a question during the Q and A portions of talks, I loved it. Rich blue-sky discussions have kept me motivated through the inevitable slower portions of writing and finicky data processing in my project, and Wavelength had them in buckets! The fact that I got so much out of it was part of my reason for volunteering to host it, as I felt it would be a way for me to give back to the community and get more involved in RSPSoc.

After an extremely enjoyable and well-run conference at MSSL during the spring of 2016, it was up to me to deliver a conference in Kingston in March 2017, while coordinating the final run-in to my PhD project. While things could definitely have been done better, and I should maybe have been a bit more ruthless about advertising the conference to a wider audience, I have to say I think it ran quite smoothly, and the delegates got a lot out of it, as did I! I’ll include a summary of each day below, along with my favourite parts of the three-day agenda, including a longer description of one delegate presentation.

Monday 13th March

Delegates arrived at Kingston train station at around 11.30 am. I had enlisted the help of my colleague Paddy to go and meet the delegates, as I had to run up the poster boards to the conference room. After lunch and a quick roll call, things kicked off with 6 talks spanning image processing and Remote Sensing of vegetation.

Andrew Cunliffe, eventual winner of best speaker, showed some captivating UAV footage of Qikiqtaruk, a site where Arctic ecology is being actively researched to gain insight into how observations differ across scales, in both the changing ecological and geomorphological landscapes. I was interested in his hesitance to call what he was doing with UAVs ‘ground truthing’ of satellite images; he preferred ‘evaluation’, as ground truth was never really acquired (outside of GCPs for a few of the 3D models). You can check out his profile on Google Scholar, which lists some pretty interesting research!

Monday wrapped up with a meal at a local Thai food restaurant, the Cocoanut, a staple with the Kingston Research folk!

Tuesday 14th March

After a tour of Kingston’s town centre in the morning, we returned to the conference venue to listen to Alastair Graham, of geoger fame, give an insightful and extremely helpful talk about career options for Remote Sensing scientists. I felt really lucky to have had the opportunity to host him; truth be told, it was a bit of a fluke we crossed paths at all! He had been retweeting some of the tweets from the @sentinel_bot Twitter account I had made, which led me to look at his Twitter and subsequently his website. Realising he was organising an RS meeting in Oxford the month before Wavelength (Rasters Revealed), I jumped at the chance to get him on board, and I’m glad I did! I won’t go into his use of sli.do, except to mention that it’s worth looking into.

On Tuesday, James Brennan’s talk about the next generation of MODIS burnt area products brought me back to my Master’s days at UCL, and my time spent with the JRCTIP products. James’ talk focused on the binary nature of classification, and how he was looking into using a DCT to model the behaviour of fires, something like a fuzzy land classification. It was really engaging and I enjoyed his super-relaxed style of presenting.

Tom Huntley of Geoxphere also came in to give us a talk on recent advancements at their spinout hardware company, which provides high quality cameras for mapping purposes: the XCam series. Wavelength tries to bridge the gap between industry and academia, and both Tom’s and Alastair’s talks brought in the industry element I was hoping for.

After a nice meal at Strada Kingston, we hit the bowling alley before wrapping up day 2.

Wednesday 15th March

Wednesday’s session opened with delegates talking mainly about data processing. Ed Williamson, from the Centre for Environmental Data Analysis (CEDA), gave a very interesting introduction to the supercomputing facilities they provide (JASMIN), as well as the services offered to clients who choose to use them. They host the entire Sentinel catalogue, which is an outrageous amount of data, so it was interesting to be given a whirlwind tour of how this is even possible, practically speaking.

We also had the pleasure of listening to José Gómez-Dans from NCEO talk to us about integrating multiple data sources into a consistent estimation of land surface parameters using advanced data assimilation techniques. I had done my Master’s thesis with José, and (somewhat) fondly remember trying to interpret charts where the error bars couldn’t even be plotted on them in any reasonable way. This is the reality of EO, though: uncertainty is part and parcel of it!

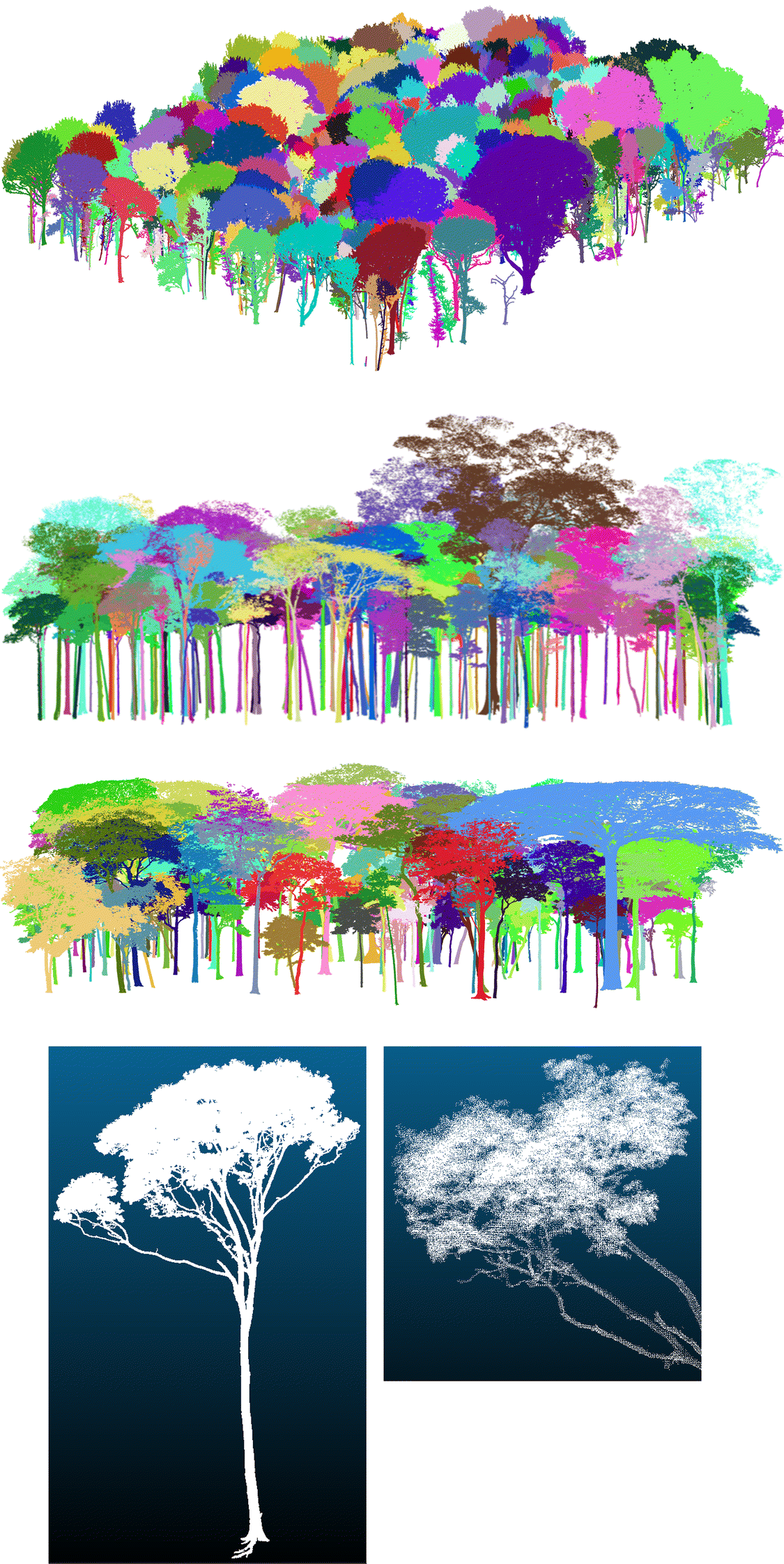

The poster session featured a wide range of topics, and I even put up my poster from EGU last year. Participants were extremely interested in drought mapping in Uganda, as well as the numerous uses for InSAR data presented. Congrats to Christine Bischoff for winning the best poster award with her investigations of ground deformation in London.

Proceedings wrapped up with deciding on the next Wavelength host (congrats to Luigi Parente, of Loughborough Uni) and a lovely lunch in the sun.

Summary

Wavelength was really fun and interesting to organise, and I hope it’s a tradition we can keep going as a society. I’ve made the conference booklet publicly available here. For those of you reading this blog who aren’t members, I suggest you join; the benefits are evident.

For now, for me, it’s EGU and beyond. I’m also aiming to attend the annual RSPSoc conference at Imperial in September with the latest developments from my fieldwork data!